Creative Prediction

This is the website for the Creative Prediction project – a collection of walkthroughs and examples by Charles Martin and others for applying machine learning to creative data in interactive creativity systems such as interactive artworks and musical instruments.

The main content is a list of tutorials and exercises that run on your computer or in Google Colab, along with presentations and lectures about using machine learning with creative data. There’s also a page about setting up your computer with Python and the required libraries if you want to run the code examples yourself.

If you already use, or want to use, machine learning in a creative artistic setting, this is the place for you!

About CrePre

Creative Prediction is about applying predictive machine learning models to creative data. The focus is on recurrent neural networks (RNNs), deep learning models that can be used to generate sequential and temporal data. RNNs can be applied to many kinds of creative data including text and music. They can learn the long-range structure from a corpus of data and “create” new sequences by predicting one element at a time. When embedded in a creative interface, they can be used for “predictive interaction” where a human collaborates with, influences, and is influenced by a generative neural network.
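The generation loop described above, where the network predicts one element, samples it, and feeds it back in as the next input, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not code from the Creative Prediction tutorials: the toy vocabulary of note names, the hidden size, and the function names (`rnn_step`, `softmax`, `generate`) are hypothetical, and the weights are random rather than trained, so the output is arbitrary. A real model would learn these weights from a corpus using a deep learning library.

```python
import math
import random

# Hypothetical toy vocabulary; a real model would use characters, notes, etc. from a corpus.
VOCAB = ["C", "D", "E", "G", "A"]
HIDDEN = 8  # assumed hidden-state size for this sketch

rng = random.Random(0)  # fixed seed so the sketch is reproducible


def rand_matrix(rows, cols):
    """Random weights in [-0.5, 0.5]; a trained RNN would learn these from data."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


W_xh = rand_matrix(HIDDEN, len(VOCAB))  # input -> hidden
W_hh = rand_matrix(HIDDEN, HIDDEN)      # hidden -> hidden (the recurrent connection)
W_hy = rand_matrix(len(VOCAB), HIDDEN)  # hidden -> output logits


def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rnn_step(x_index, h):
    """One simple (Elman) RNN step, biases omitted: h' = tanh(W_xh x + W_hh h), logits = W_hy h'."""
    x = [1.0 if i == x_index else 0.0 for i in range(len(VOCAB))]  # one-hot input
    pre = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, h))]
    h_new = [math.tanh(p) for p in pre]
    return matvec(W_hy, h_new), h_new


def softmax(logits, temperature=1.0):
    """Turn logits into a probability distribution; temperature rescales the logits first."""
    scaled = [l / temperature for l in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def generate(seed_index, length, temperature=1.0):
    """Predict one element at a time, feeding each sampled element back in as the next input."""
    h = [0.0] * HIDDEN
    current = seed_index
    output = [VOCAB[current]]
    for _ in range(length - 1):
        logits, h = rnn_step(current, h)
        probs = softmax(logits, temperature)
        current = rng.choices(range(len(VOCAB)), weights=probs)[0]
        output.append(VOCAB[current])
    return output


print(" ".join(generate(seed_index=0, length=12)))
```

Sampling from the predicted distribution, rather than always picking the most likely element, keeps generated sequences varied. The `temperature` parameter is a common sampling control, assumed here: values below 1 sharpen the distribution toward likely elements, while values above 1 flatten it toward more surprising choices.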

This site includes walkthroughs of the fundamental steps for training creative RNNs using live-coded demonstrations with Python code in Jupyter Notebooks.

Workshops

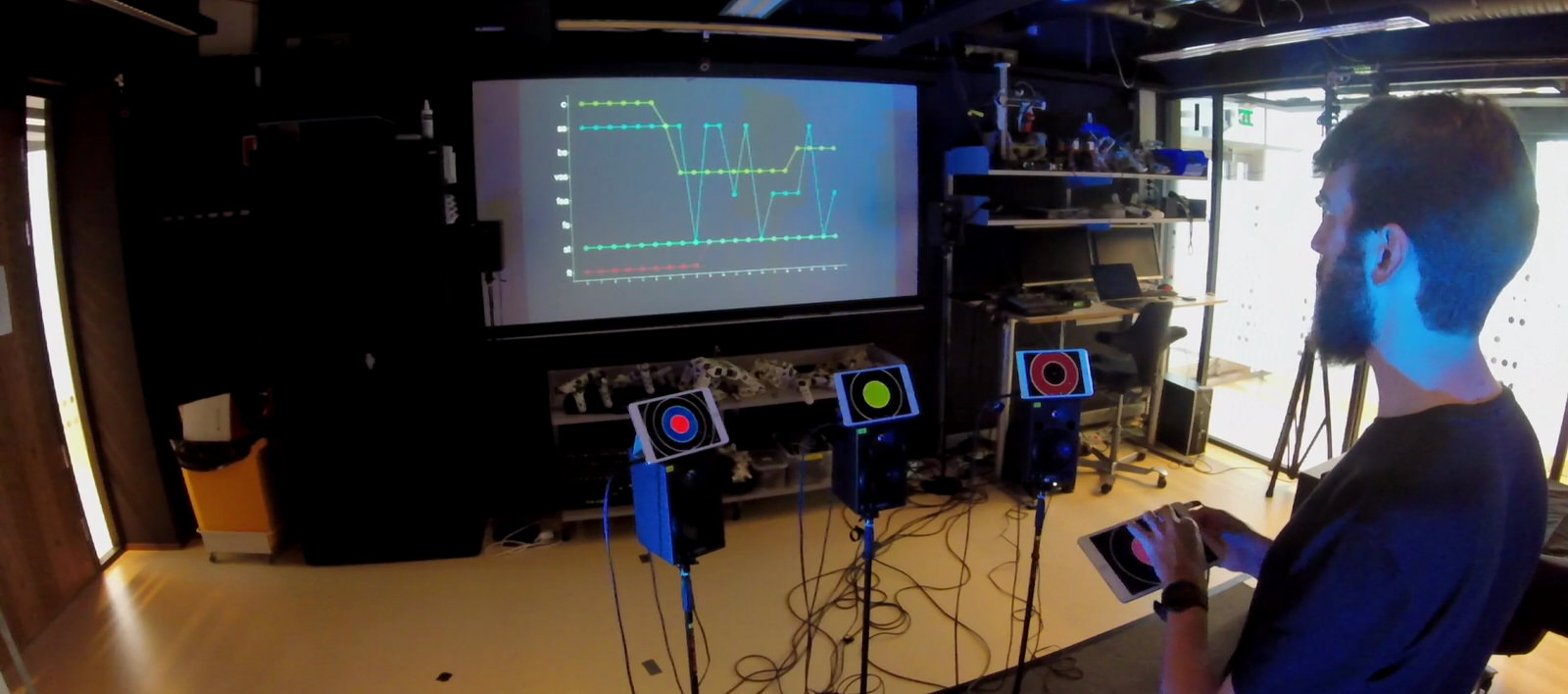

Creative Prediction workshops have been presented in Oslo, Tokyo, and Porto Alegre. The workshops combine lectures on machine learning for the creative arts with collaborative hacking sessions for starting your own artistic prediction projects!