Creative Prediction with Neural Networks

A course in ML/AI for creative expression

Deep Learning in the Cloud

Charles Martin - The Australian National University

Why cloud for ML/AI?

- Not always convenient or cost-effective to use a big workstation.

- We like small laptops without hot GPUs and processors.

- We might want to move from research to product!

- The internet is cool/fun?

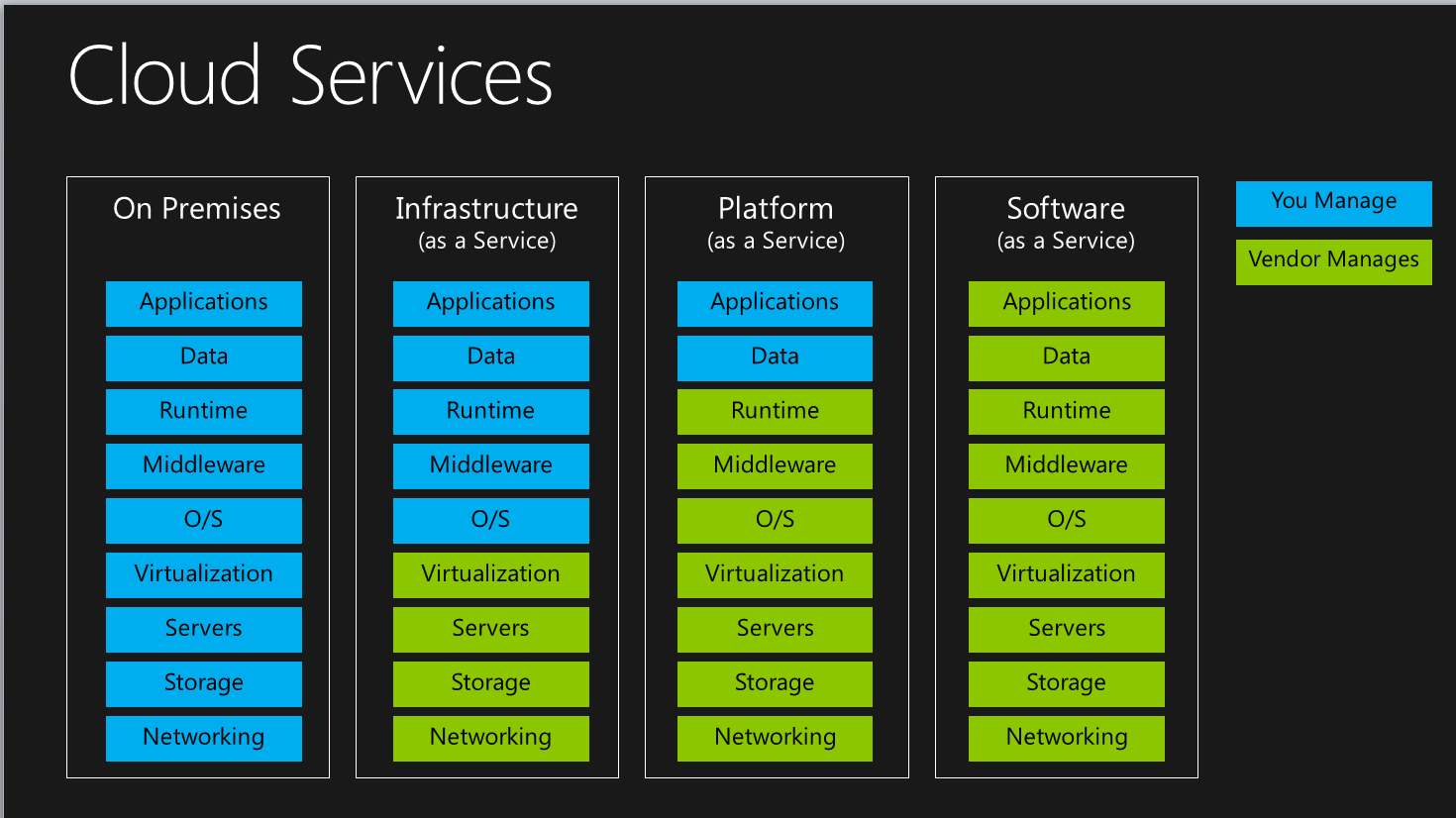

Cloud Models

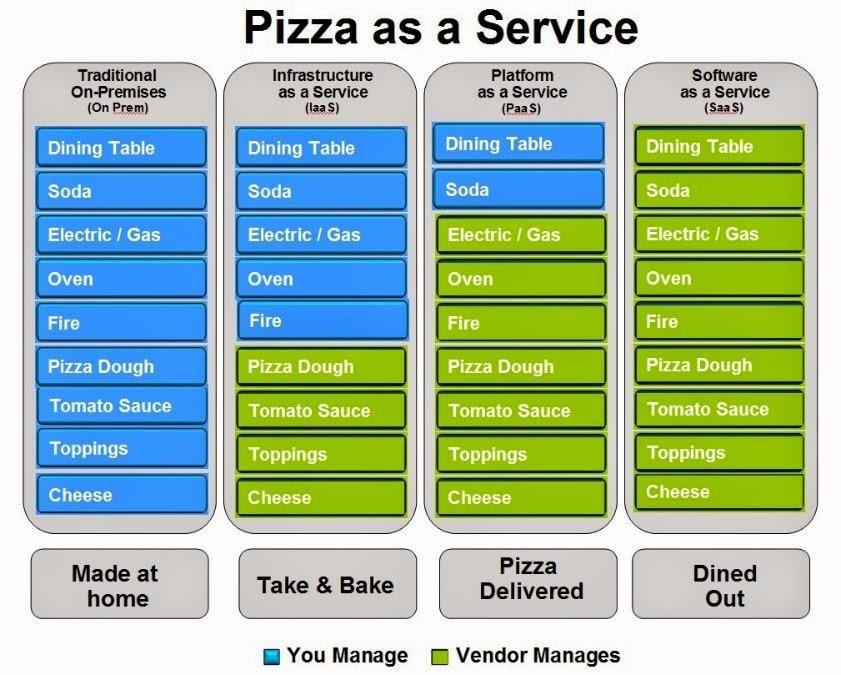

Pizza as a Service

What do we need?

- GPUs: Nvidia [GTX1080TI, K80, P100, V100] or… “Tensor Processing Units”

- OS: Linux?

- CUDA + cuDNN

- Python

- Python libraries: TensorFlow, Keras, scikit-learn, etc.

- Jupyter
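A quick way to see how much of this stack a machine already has is a small check script. This is a minimal sketch using only the standard library; the module names are the usual import names for the libraries above (an assumption about how they were installed), and looking for `nvidia-smi` on the PATH only suggests that an NVIDIA driver is present.

```python
import importlib.util
import shutil
import sys

# Usual import names for the libraries listed above (assumed, adjust as needed).
REQUIRED_MODULES = ("tensorflow", "keras", "sklearn", "jupyter_core")


def check_environment(modules=REQUIRED_MODULES):
    """Return a dict describing which parts of the stack are present."""
    report = {
        "python": sys.version.split()[0],
        # nvidia-smi ships with the NVIDIA driver; absence suggests no GPU driver.
        "nvidia_smi": shutil.which("nvidia-smi") is not None,
    }
    for name in modules:
        # find_spec returns None when a top-level module is not installed.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for item, status in check_environment().items():
        print(f"{item}: {status}")
```

Running it on a laptop and on a cloud VM gives a quick comparison of what still needs installing.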

On Premises: Workstations

- Workstations (15-50k NOK)

- Pro: fun to play with

- Pro: good for small number of users

- Pro: one-time cost

- Con: not practical for many users

- Con: have to keep setting up eduroam

- Con: I don’t like sharing?

Infrastructure-as-a-Service

- Virtual servers

- Set up server, access via Linux shell

- Amazon Web Services (AWS)

- Google Cloud Platform (GCP)

- DigitalOcean (DO)

- UH Cloud (UiO)

Platform-as-a-Service

- Google Kubernetes Engine (GKE)

- Deploy “Containerised” application to servers.

- (Deploy DL to Kubernetes)

- Sigma2 (UiO)

Software-as-a-Service

- Google Colaboratory (👏🏼)

- Kaggle Kernels

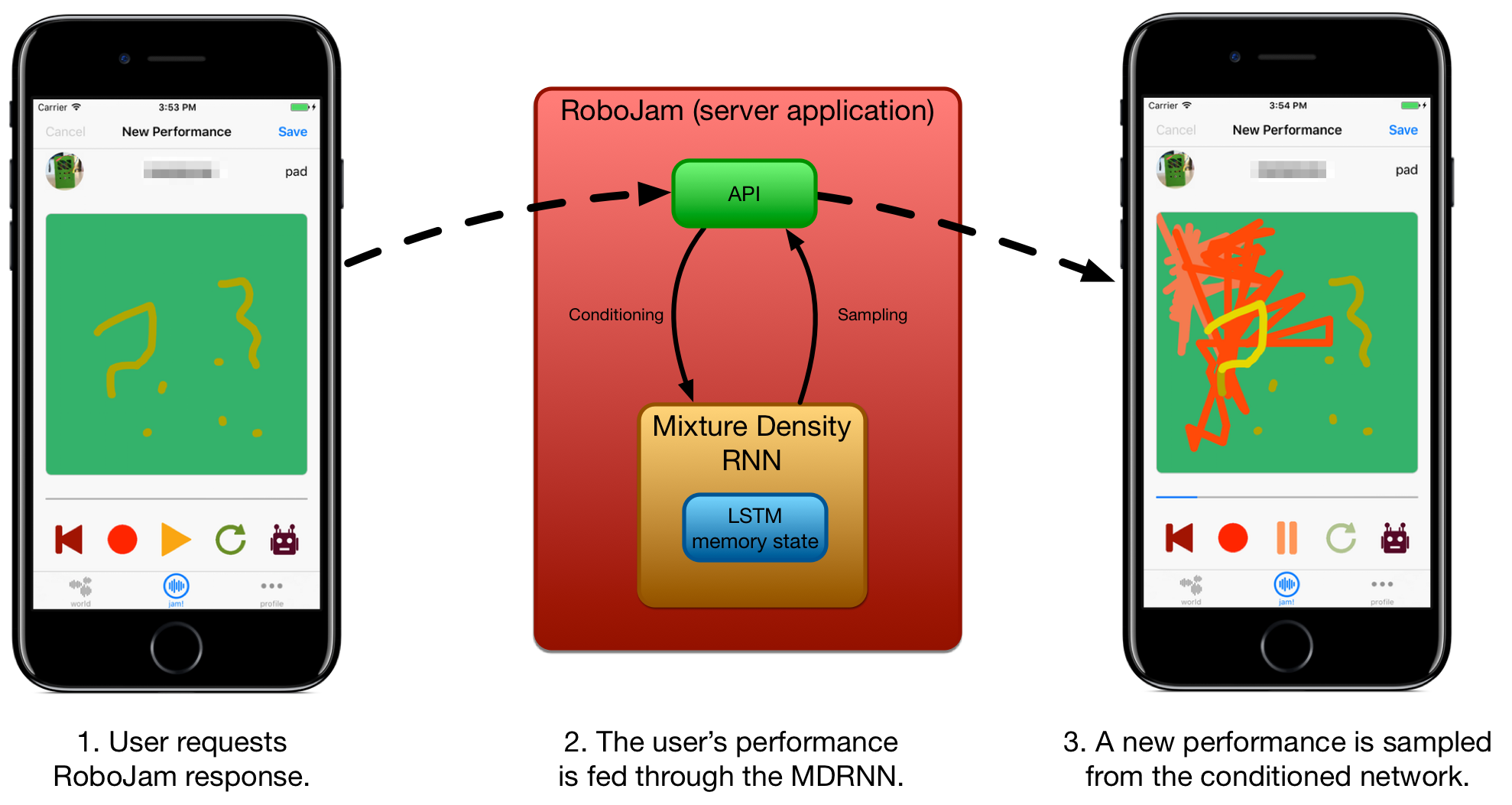

Example: Robojam

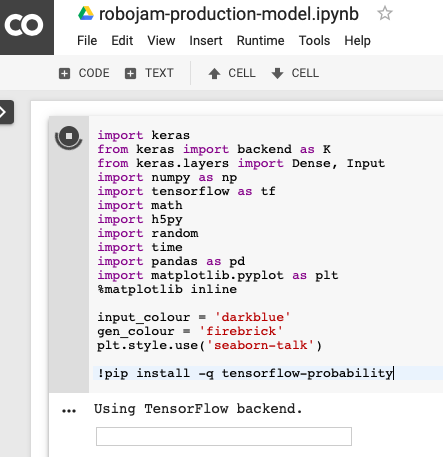

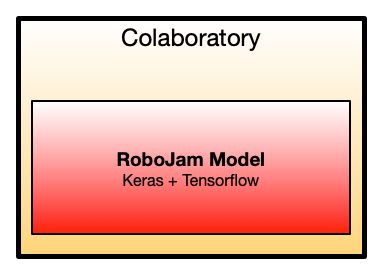

RoboJam is a Keras project, now deployed as a Flask web application.

Starting point: Local + SaaS

- Developed on local shared workstation

- Also worked on Colaboratory

- Tips:

- keep Jupyter sessions around with `screen`

- tunnel the Jupyter port with `ssh -L 8888:localhost:8888`

- Could also use Google Cloud VMs with GPUs for short training runs
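Once the tunnel is up, the remote Jupyter server should answer on local port 8888. A minimal sketch for checking that from Python follows; the helper name `port_is_open` is made up for this example, and it only tests that something accepts TCP connections, not that it is actually Jupyter.

```python
import socket


def port_is_open(host="localhost", port=8888, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host not reachable.
        return False


if __name__ == "__main__":
    if port_is_open():
        print("Tunnel looks up: open http://localhost:8888 in a browser")
    else:
        print("Nothing listening on 8888: is the ssh tunnel running?")
```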

Starting point: IaaS + PaaS

- Can use VMs and Containers for DL development

- Google’s “Cloud Deep Learning VM Image”

- Comes with `jupyterhub` running to do development in a browser.

- Expensive for a good machine: K80 GPU 0.45 USD/h
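Whether 0.45 USD/h is expensive depends on how many hours are used. The sketch below does the arithmetic: cost of a training run, and the number of GPU hours at which renting matches the workstation prices quoted earlier (15-50k NOK). The exchange rate of 10 NOK per USD and the 8-hour run are illustrative assumptions, not figures from the slides.

```python
K80_USD_PER_HOUR = 0.45       # rate quoted on the slide
ASSUMED_NOK_PER_USD = 10.0    # illustrative exchange rate, not from the slides


def training_cost_usd(hours, usd_per_hour=K80_USD_PER_HOUR):
    """Cost of renting the GPU VM for a given number of hours."""
    return hours * usd_per_hour


def break_even_hours(workstation_nok, nok_per_usd=ASSUMED_NOK_PER_USD,
                     usd_per_hour=K80_USD_PER_HOUR):
    """GPU hours at which cloud rental equals the workstation's purchase price."""
    return workstation_nok / nok_per_usd / usd_per_hour


if __name__ == "__main__":
    print(f"8 h training run: {training_cost_usd(8):.2f} USD")
    for price in (15_000, 50_000):
        print(f"{price} NOK workstation: break-even after "
              f"{break_even_hours(price):.0f} GPU hours")
```

This ignores electricity, storage, and differences in GPU speed, so it is only a rough guide.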

SaaS Architecture on Colab

Production: Turning into a web service

- Used the `flask` framework to create a RESTful web API

- Just one endpoint: `https://0.0.0.0:5000/api/predict`

- Send performance as JSON to that endpoint

- RoboJam RNN model is conditioned on the input, then a continuation is predicted.

- Prediction returned as JSON

- “Deploying DL models with Flask”
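From the client side, the request/response cycle above could look roughly like this. It is a minimal sketch using only the standard library: the URL is the one from the slide, but the JSON layout of a "performance" (here a list of touch events under a `touches` key) is purely hypothetical, since the slides do not show RoboJam's actual schema.

```python
import json
import urllib.request

# Endpoint from the slide; replace the host with the real server address.
API_URL = "https://0.0.0.0:5000/api/predict"


def build_predict_request(performance, url=API_URL):
    """Build a POST request carrying the performance as a JSON body."""
    body = json.dumps(performance).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def request_prediction(performance, url=API_URL, timeout=10):
    """Send the performance and return the server's JSON reply as a dict."""
    request = build_predict_request(performance, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical payload: x, y, and time values for each touch.
    example = {"touches": [[0.5, 0.5, 0.0], [0.52, 0.48, 0.1]]}
    print(build_predict_request(example).data.decode("utf-8"))
```

Calling `request_prediction(example)` only works while the server is running and reachable at the given address.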

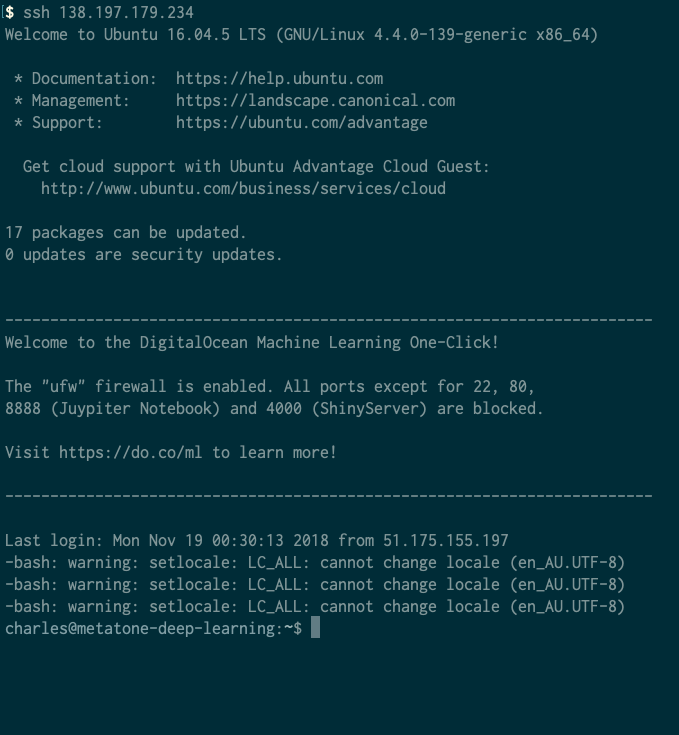

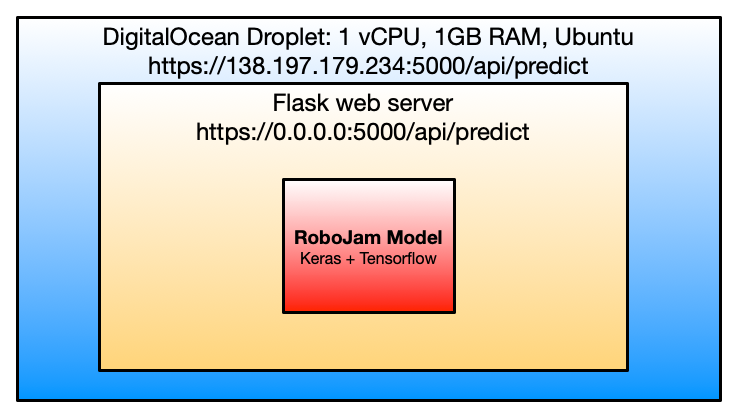

Production: Deploying to DigitalOcean

- Using cheapest DigitalOcean VM: 1vCPU, 1GB, $5 per month.

- Log in, clone git repo, run server in a detached `screen`.

- Works! Deployed for about a year.

- Predictions take about 1.0-1.5 s, not too bad.

- Problem: what if the app gets popular?
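The popularity problem can be put in numbers. Assuming the server handles one prediction at a time (an assumption about the deployment, not something the slides state), the prediction times above cap throughput as sketched here; the `workers` parameter is a hypothetical knob for the number of independent copies of the server.

```python
def max_requests_per_minute(seconds_per_prediction, workers=1):
    """Upper bound on throughput when each worker serves requests serially."""
    return workers * 60.0 / seconds_per_prediction


if __name__ == "__main__":
    for seconds in (1.0, 1.5):
        print(f"{seconds} s per prediction: "
              f"at most {max_requests_per_minute(seconds):.0f} requests/minute")
```

So a single small VM tops out at roughly 40 to 60 predictions per minute, and serving more users means running more copies of the server.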

IaaS Architecture on DigitalOcean

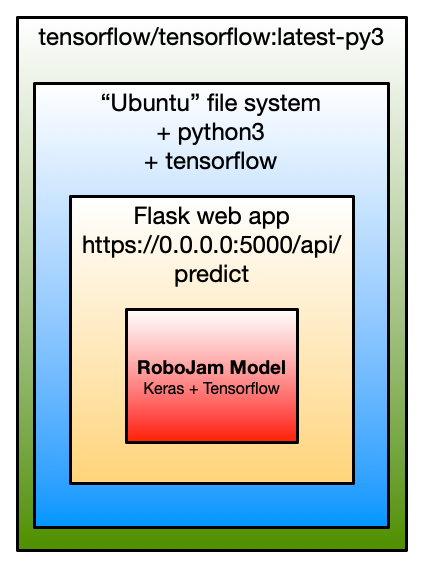

Containerising: Docker

- We want to make a “container” that includes Robojam and all necessary libraries to run on any Docker installation.

- We’ll start with the TensorFlow Docker image, which includes a development environment for TensorFlow.

Containerising: Dockerfile

FROM tensorflow/tensorflow:latest-py3

LABEL maintainer="Charles Martin [email protected]"

COPY requirements.txt /tmp/

RUN pip install --requirement /tmp/requirements.txt

COPY . /tmp/

WORKDIR /tmp

CMD [ "python", "./serve_tiny_performance_mdrnn.py" ]

Containerising: Building the container

sudo docker build -t robojam:latest .

docker tag robojam:latest charlepm/robojam:latest

docker push charlepm/robojam:latest

Containerising: Running the Container

docker run -d -p 5000:5000 robojam:latest

Containerised Architecture with Docker

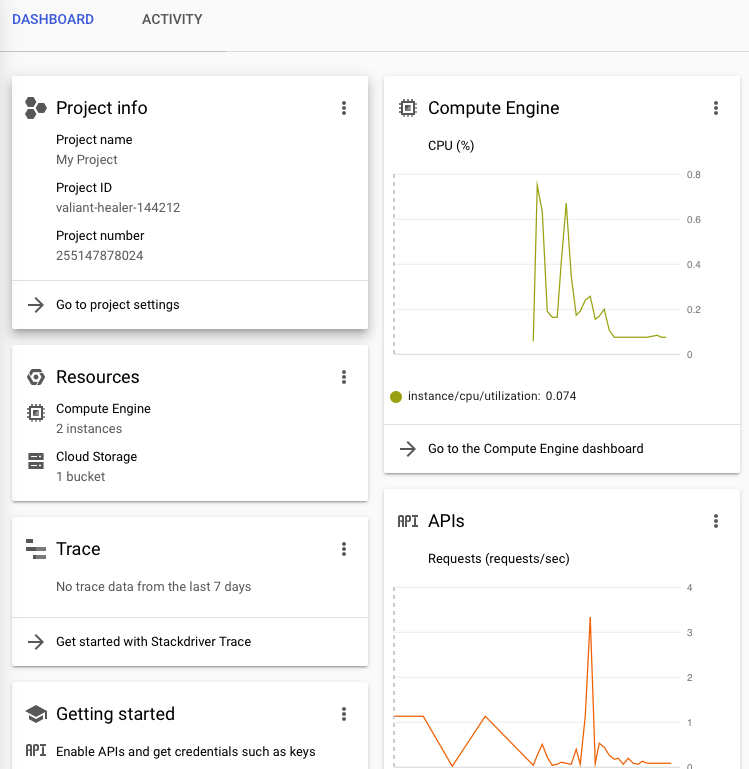

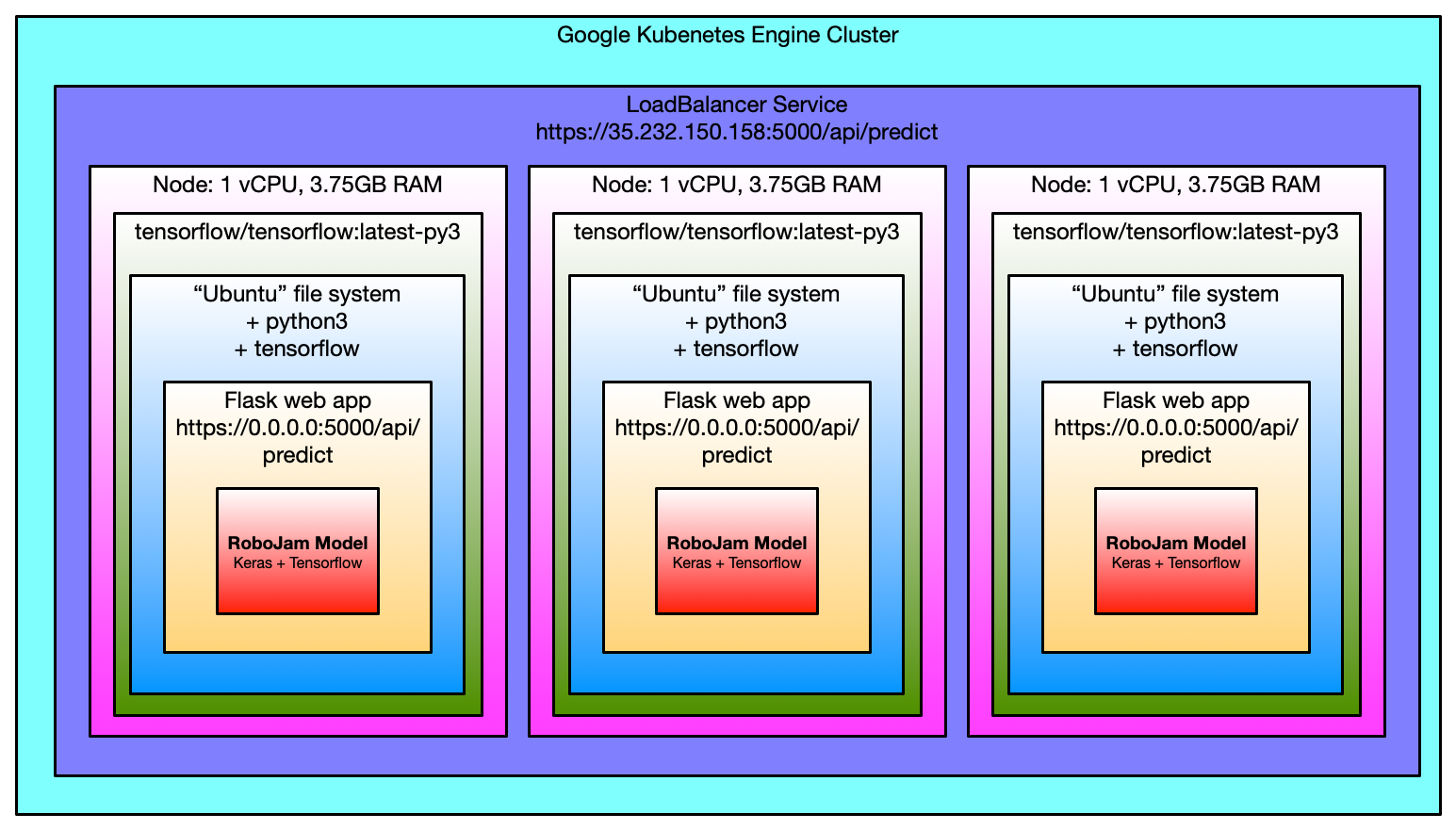

Deploying to Kubernetes

- Kubernetes is a system to run Docker containers on multiple computers simultaneously.

- Let’s set up a little cluster on Google Cloud Platform and deploy Robojam.

- Need to set up computers through the web interface

- Then use command interface to start Robojam.

Deploying Robojam to Cluster

kubectl create deployment robojam-cluster --image=charlepm/robojam:latest --port=5000

kubectl get pods

kubectl expose deployment robojam-cluster --type=LoadBalancer --port 5000 --target-port 5000

kubectl get service

Micro-Service Architecture with Kubernetes

Conclusion

- ML/AI isn’t just for research, we can make cool applications too!

- Cloud resources are readily available:

- Not too expensive to use powerful servers for a short time (training)

- Can do a lot with cheap servers for production

- Try out Docker etc.; it makes life much easier.

- Try out JupyterHub for development. Might be the way of the future?